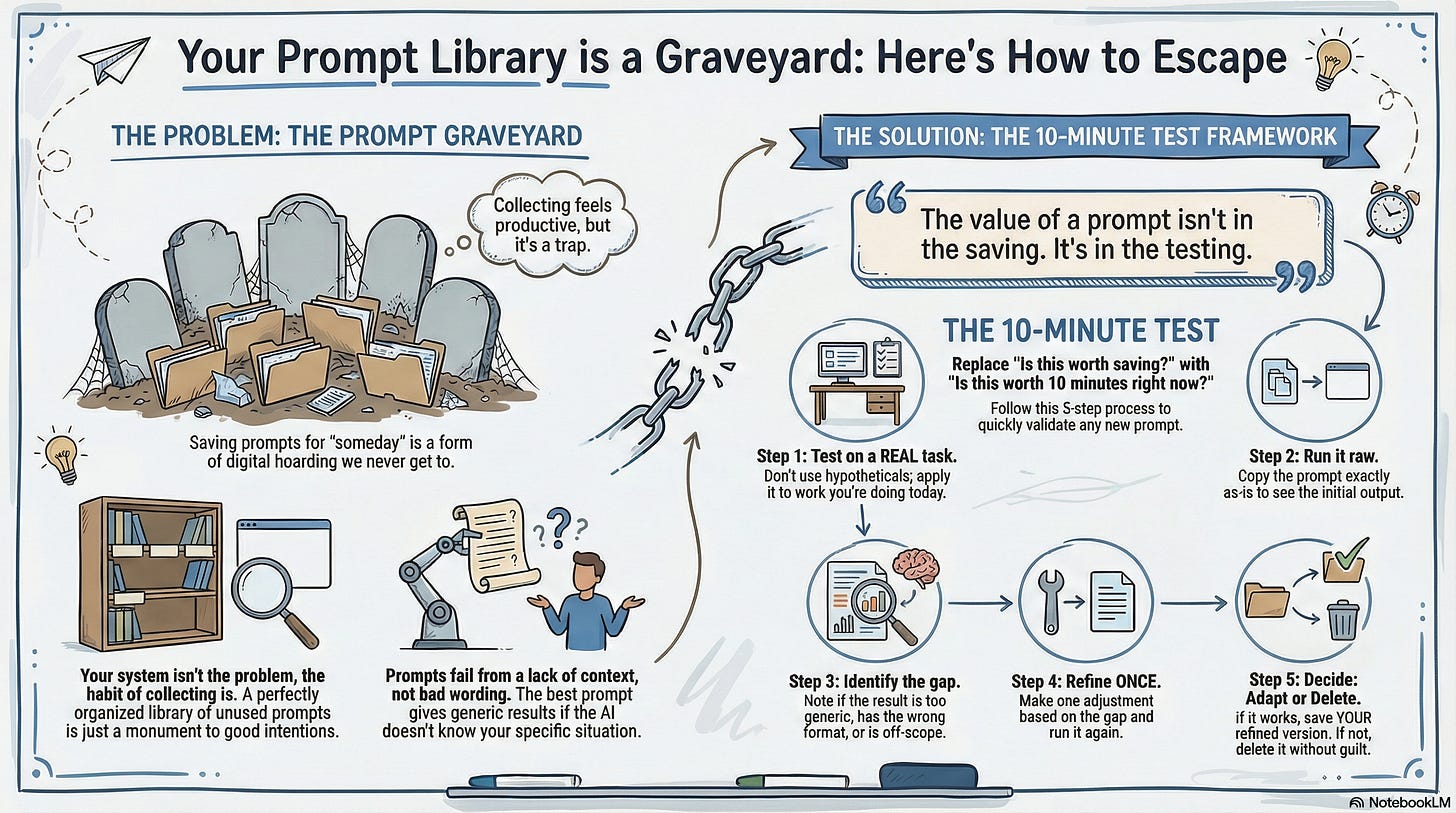

Your Prompt Library Is the New Swipe File Graveyard

Collecting prompts feels productive. It’s the same “someday” trap, just a different medium.

Last week, I got a prompt from The Neuron that looked genuinely useful. Instead of saving it, I tested it. Took ten minutes. Refined it for my actual workflow. Used it twice that day. Deleted the original.

That’s when it hit me: the prompt that works is the one you test today. The one you save for someday? That’s just a different kind of hoarding.

I didn’t always think this way. For months, I did what most people do. Subscribed to newsletters specifically for their prompts. Copied the good ones into a Notion database. Tagged them. Told myself I’d circle back when I had time.

My prompt library grew. My actual prompt usage didn’t.

Sound familiar?

We spent years accumulating free PDFs, swipe files, and courses we never finished. We told ourselves it was research. Preparation. Building a toolkit for someday.

Then AI showed up, and we started doing the exact same thing with prompts. Different medium. Same pattern. Same graveyard.

The database gets bigger. The “someday” pile grows. And the gap between collecting and actually using widens every week.

Why Your Prompt Organization System Isn’t the Problem

Most people think the problem is organization. They believe if they just had a better system... better tags, better folders, a smarter way to categorize prompts... they’d actually use them.

But here’s what I discovered: the system isn’t the problem. The collecting is.

Every time you save a prompt “for later,” you’re making a tiny decision to not engage with it now. And that decision compounds. One saved prompt becomes ten. Ten becomes fifty. And somewhere along the way, the act of collecting starts to feel like progress.

It’s not. It’s preparation theater.

I know this because I lived it. My Notion database had prompts organized by category, use case, and even AI model. Beautiful system. I opened it maybe once a month.

Meanwhile, the prompts I actually used? They never made it into the database at all. I tested them in the moment, refined them, and moved on.

The library was a monument to good intentions. The real work happened outside of it.

If you keep building the collection, here’s what happens:

You spend more time organizing prompts than testing them

You forget why you saved half of them in the first place

You feel behind even though your database is full

You mistake the size of your library for readiness

The uncomfortable truth? A smaller prompt habit that you actually use beats a massive library you admire from a distance.

What I Learned When I Stopped Saving Prompts

I realized my prompt library wasn’t helping me when I went looking for something I knew I’d saved. Took me fifteen minutes to find it. Then another ten to remember why I thought it was useful. By the time I actually tested it, I’d burned almost half an hour on a prompt that didn’t even work for my situation.

That was my wake-up call.

The system I built to save time was costing me more than it saved.

That’s when I realized something.

I already had everything I needed to use prompts effectively. I just had it backwards. I was treating prompts like collectibles when they’re actually disposable tools. You don’t save a sticky note forever. You use it, then throw it away.

What if I treated prompts the same way?

So I put it to the test.

Instead of saving every prompt other people shared, I tested it first and followed four rules:

No more saving by default. When I saw a promising prompt, I tested it immediately or let it go.

10-minute rule. If I couldn’t test and evaluate a prompt in 10 minutes, it wasn’t worth my time right now.

Refine or delete. After testing, I either adapted it for my specific workflow or deleted it entirely. No “maybe later” pile.

One active prompt per category. Instead of fifty options for summarization, I kept one that actually worked.

What surprised me was how little I missed. The prompts I let go? I never thought about them again. The ones I tested and refined? I used them repeatedly.

After a few weeks, something shifted.

“The value of a prompt isn’t in the saving. It’s in the testing.”

I stopped being beholden to new prompts. I stopped opening newsletters with the thought of “I should save this.” Instead, I read them with a simple filter: Is this useful to me right now? If yes, test it. If no, move on.

My prompt “library” shrank to maybe a dozen. My actual prompt usage doubled.

This Pattern Applies Beyond Prompts

It’s the same trap with tools, templates, frameworks, and tactics. We collect because collecting feels safe. Testing feels risky. What if we pick wrong? What if there’s a better one?

But here’s what I learned the hard way: the prompt you tested and refined for your workflow will always outperform the “perfect” prompt you saved but never touched.

The gap isn’t between good prompts and bad prompts. It’s between tested and untested.

The 10-Minute Framework That Replaced My Prompt Database

Why “Save for Later” Was Costing You

Every prompt you saved without testing created a small decision debt. You told yourself you’d evaluate it eventually. But eventually never came, and now you have a database full of untested possibilities and no clear sense of which ones actually work.

Which means you’re no closer to using AI effectively than you were before you started collecting.

Here’s what changed for me: I stopped asking “Is this worth saving?” and started asking “Is this worth ten minutes right now?”

The 10-Minute Prompt Test

When you encounter a new prompt, run it through this filter before you do anything else:

Test it raw. Copy the prompt exactly as written. Run it with a real task you’re working on today. Not a hypothetical. Something real.

Note what breaks. Where did the output miss? Too generic? Wrong format? Missing context about your situation?

Refine once. Make one adjustment based on what broke: add your context, change the output format, or narrow the scope. Run it again.

Decide: use, adapt, or delete.

If it worked after one refinement, save your version (not the original).

If it needs heavy modification, it’s not the right prompt for you right now. Delete it.

If it flopped completely, delete it without guilt.

Total time: 10 minutes. You now know more about that prompt than you would after six months in a database.

Two Prompts Worth Testing Today

Here are two from The Neuron that passed my filter. Don’t save them. Test them.

The “Compare and Decide” Prompt:

Compare [Option A] vs [Option B] for [my situation].

Output a table with: features, tradeoffs, risks, cost/time, best-fit.

Then give a recommendation with clear reasoning and "if X, choose A; if Y, choose B."

Research and show any hidden costs.

Include a short 'What would make me regret this?' section for each option, and end with a 3-step next action plan (trial, pilot, or due diligence).

Give a confidence level regarding how likely it is to be the right choice given what you know about my needs today and me as a person.

Best for: Tool decisions, vendor comparisons, build vs. buy choices.

Downside: Requires clear context about your situation upfront, or the output stays generic.

The “Give Me Options” Prompt:

Generate 10 distinct approaches to solve [problem].

For each: give a one-line summary, best use case, and one downside.

Then recommend the top 2 based on [my priority: speed / cost / quality / risk].

Best for: When you’re stuck on approach and need to see the landscape quickly.

Downside: Can feel overwhelming without a clear priority filter.

The Prompt You Keep

After testing, your saved prompts should meet one criterion: you’ve used them at least twice on real work.

Not “this looks useful.” Not “I might need this.” You’ve tested it, refined it, and it delivered.

Everything else is decoration.

The Real Reason Most Prompts Fail (It’s Not the Prompt)

When I talk with clients about using AI and LLMs, they often tell me they’re frustrated because their results are usually… off.

They believe the problem is finding (read: copying) the right prompt: the magic combination of someone else’s words that unlocks useful output.

But here’s what I’ve noticed: the prompts aren’t the issue. The context is.

That “Compare and Decide” prompt above? It works beautifully when AI knows your situation, your priorities, and your constraints. Run it cold, with no context? You get generic output that requires heavy editing.

This is why prompt collecting fails. You’re gathering tools without giving them anything to work with. It’s like collecting recipes but never stocking your kitchen.

The shift happens when you stop hunting for better prompts and start giving AI better context. A clear description of your business. Your audience. Your voice. Your constraints.

Suddenly, even simple prompts work harder.

My prompt library got smaller. But my context documents got richer. That’s what actually moved the needle.

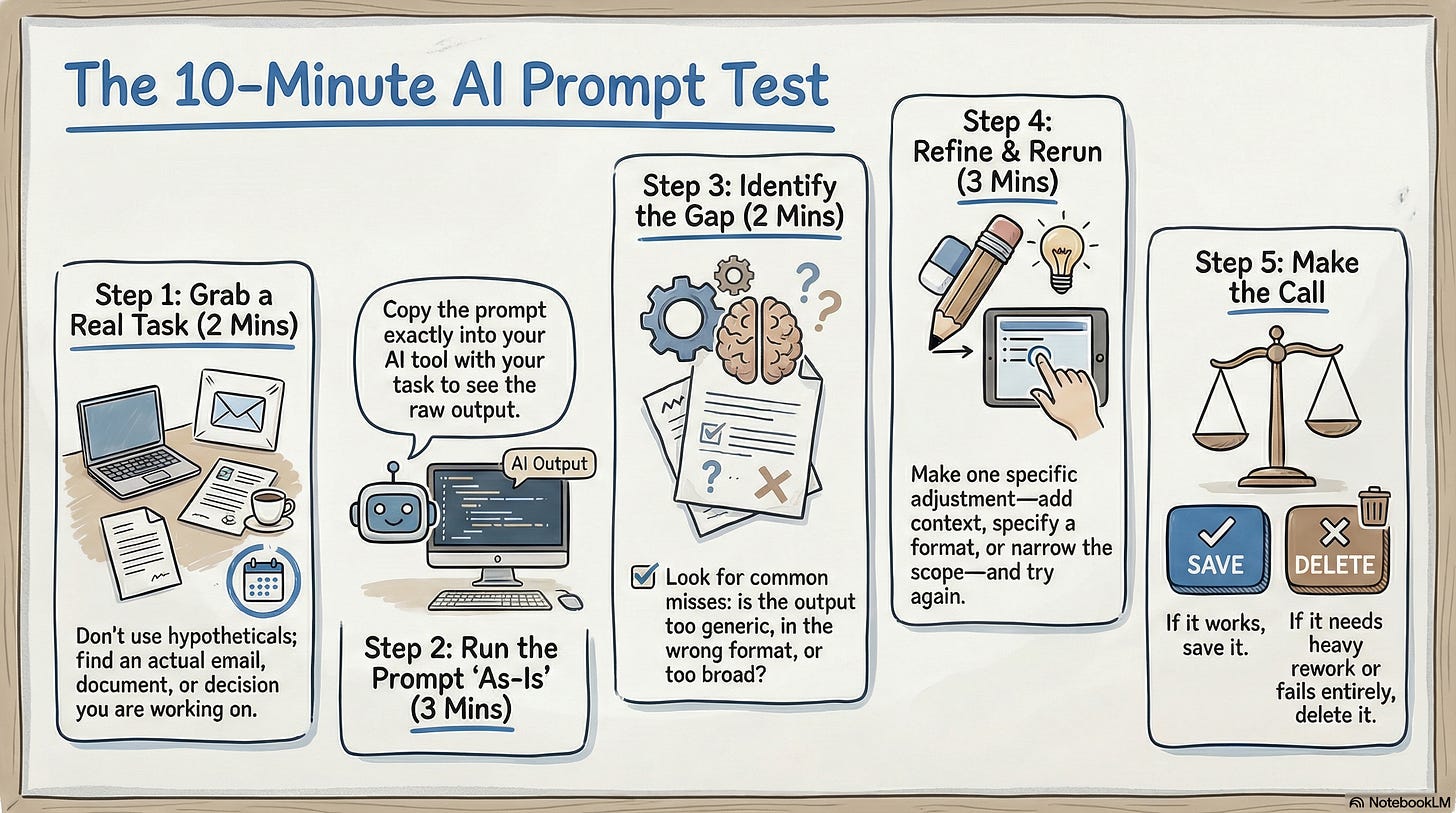

How to Evaluate Any Prompt in 10 Minutes

Here’s the smarter approach, step by step.

The Old Way: Save prompt. Tag it. Tell yourself you’ll test it later. Open your database in three months and wonder why you saved half of what’s there.

The Smarter Way: Test immediately. Refine once. Decide now.

The Exact Process

Step 1: Grab a real task (2 minutes)

Don’t test prompts with hypotheticals. Find something you’re actually working on today. An email you need to write. A decision you’re weighing. A document you’re drafting.

Step 2: Run the prompt raw (3 minutes)

Copy it exactly. Paste it into your AI tool with your real task. See what happens without modification.

Step 3: Identify the gap (2 minutes)

Where did the output miss? Usually it’s one of three things:

Too generic (needs your specific context)

Wrong format (needs output structure specified)

Wrong scope (needs narrower focus)

Step 4: Refine and rerun (3 minutes)

Make one adjustment: add context about your business, specify the format you need, or narrow the scope. Run it again.

Step 5: Make the call

Works after one refinement? Save your refined version.

Needs heavy rework? Not the right prompt for you right now. Delete it.

Flopped entirely? Delete without guilt.

You just learned more in 10 minutes than you would in six months of saving.

TL;DR Version

Collecting prompts feels productive but creates the same “someday” pile as PDFs and courses

The value of a prompt is in the testing, not the saving

10 minutes of real testing beats six months in a database

Prompts fail because of missing context, not because they’re the “wrong” prompt

Keep only what you’ve used twice on real work. Delete everything else.

You don’t need a bigger prompt library.

You need a clearer filter for what’s worth your time and better context to make any prompt work harder.

The prompts you test today will always outperform the collection you admire from a distance.

So here’s my question: What’s one prompt you’ve been “saving for later” that you could test in the next 10 minutes?

One More Thing

If you noticed the real issue here... that prompts fail because of missing context, not missing prompts... you’re ready for the next step.

Next week, I’m running a workshop: Teach AI Your Business Once.

In this working session, you’ll build a simple Context Library for your business. Core Identity, Voice & Tone, and Audience documents you can drop into any AI tool.

Instead of starting from scratch every time, you’ll leave with infrastructure that makes AI sound more like you and need far less editing.

No more prompt hunting. No more “someday” piles. Just context that makes everything work better.
